
As any seasoned hospitality professional will know, getting your disparate systems to speak the same language is incredibly important when it comes to optimising restaurant operations and driving continuous performance improvement. Fragmented solutions and data silos are the enemy of hospitality businesses that strive to streamline operations and deliver a positive customer experience.

That’s why data integration in the world of hospitality is so crucial.

Data integration means connecting your operational data to your revenue data, for example integrating your point-of-sale (POS) system with your labour scheduler. These integrations are built between systems for exactly this purpose: without them, it becomes next to impossible to understand how each element of your operations affects the others.

Data normalisation comes into play when you have multiple systems running at the same time: for example, if you’re using two different versions of a system (e.g. two point-of-sale systems) or switching from one system to another.

By normalising the data from the POS systems, hospitality organisations can establish a unified, standardised data model. This enables accurate reporting, integrations with other business systems, and the ability to derive meaningful, data-driven insights that can drive strategic decision-making and operational improvements.

However, normalising this data is no easy task: disparate data structures, inconsistent formats, and high transaction volumes all contribute to the complexity of this undertaking.

In this article, we’ll explore the importance of data integration and normalisation, the challenges that come with it, and how Tenzo can help your business overcome these obstacles and unlock the true value of your data.

Understanding Data Integration and Normalisation

Data integration at Tenzo is the process of combining data from multiple, disparate sources into a unified, centralised system (like a data warehouse). This allows organisations to gain a comprehensive view of their operations, customer behaviour, and business performance. However, the data being integrated often comes in various formats, structures, and levels of quality, necessitating a process called data normalisation.

Data normalisation is the act of organising and restructuring data to eliminate redundancies, inconsistencies, and irregularities. It ensures that the integrated data follows a predefined set of rules and standards, so that data can be compared like for like even though it comes from multiple different sources.

Why Are Data Integration and Normalisation Important?

In the hospitality industry, where margins are often slim and competition is fierce, the ability to leverage data-driven insights can make all the difference. By integrating and normalising data from your various systems, you can unlock a wealth of benefits, including:

- Easily comparable data – When you normalise data to one schema, you can compare and contrast data regardless of whether one system saves discounts at the ticket level and another at the item level.

- System flexibility – You have the freedom to use whatever system fits each site. If you operate in multiple territories where different systems are available (usually for legal reasons), or your business simply outgrows a system and needs to move to a new one, you still get cohesive reporting and comparison.

- One reporting language and source of truth – You don’t need to learn 10 different reporting systems and the quirks that come with them (whether they include VAT or service charge, for example). When you’re looking at a unified and normalised set of data, you know every figure has been calculated on the same basis.
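To make the first point concrete, here is a minimal sketch of one way to bring ticket-level discounts down to item level, so they can be compared with a system that already records discounts per item. The field names (`gross`, `discount`) and the pro rata allocation rule are illustrative assumptions, not a description of how any particular POS, or Tenzo, allocates discounts.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")


def allocate_ticket_discount(items, ticket_discount):
    """Spread a ticket-level discount across a ticket's items, pro rata to gross price.

    items: list of dicts with hypothetical keys "name" and "gross" (Decimal).
    Returns copies of the items with an item-level "discount" key. The
    allocations always sum exactly to ticket_discount: any rounding
    remainder is assigned to the last item.
    """
    if not items:
        raise ValueError("cannot allocate a discount across an empty ticket")
    total_gross = sum(item["gross"] for item in items)
    if total_gross == 0:
        raise ValueError("cannot allocate a discount across a zero-value ticket")
    allocated = Decimal("0")
    result = []
    for i, item in enumerate(items):
        if i == len(items) - 1:
            share = ticket_discount - allocated  # last item absorbs rounding
        else:
            share = (ticket_discount * item["gross"] / total_gross).quantize(
                CENT, rounding=ROUND_HALF_UP
            )
            allocated += share
        result.append({**item, "discount": share})
    return result


# A POS that already records item-level discounts skips this step, so after
# allocation both sources share the same item-level shape.
ticket = [
    {"name": "burger", "gross": Decimal("12.00")},
    {"name": "fries", "gross": Decimal("4.00")},
    {"name": "soda", "gross": Decimal("4.00")},
]
lines = allocate_ticket_discount(ticket, Decimal("5.00"))
```

Using `Decimal` rather than floats keeps the item-level discounts reconciling to the penny with the ticket total.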

The Complexities of Data Integration and Normalisation

While the benefits of data integration and normalisation are clear, the technical challenges involved should not be underestimated. Some of the key hurdles include:

- Disparate Data Structures: Each of your systems, whether it’s your POS, inventory management or labour scheduling platform, likely has its own unique data model and schema, making consolidation difficult. These schemas are also subject to change, and changes to a vendor’s API can have a big impact on pre-built integrations.

- Inconsistent Data Formats: Different date/time representations, currencies and other data types across your systems can complicate the process. Manual inputs add further difficulty: glaring anomalies, such as someone keying too many zeros into the till, need to be detected and removed during normalisation.

- High Transaction Volumes: Hospitality businesses, especially chains, can generate millions of transactions annually, requiring scalable data integration solutions.

- Integration with External Systems: Seamlessly connecting your data with third-party systems, such as labour or inventory platforms, adds an extra layer of complexity.
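The “too many zeros” problem can be illustrated with a simple rule: compare each entry with the typical transaction value for the same site and flag anything wildly out of range. The sketch below assumes a threshold of 20 times the median, an arbitrary illustrative value; a production rule would be tuned per site and would usually route flagged entries for review rather than discard them silently.

```python
from statistics import median


def split_anomalies(amounts, factor=20.0):
    """Separate plausible till entries for one site from likely keying errors.

    An entry is flagged when it exceeds `factor` times the site's median
    amount. `factor` is an illustrative assumption, not a tuned value.
    Returns (clean, flagged), preserving the original order.
    """
    if not amounts:
        return [], []
    limit = factor * median(amounts)
    clean = [a for a in amounts if a <= limit]
    flagged = [a for a in amounts if a > limit]
    return clean, flagged


# One site's transaction totals for a day; 2500.0 is a mistyped 25.00.
clean, flagged = split_anomalies([18.5, 22.0, 15.75, 2500.0, 19.0])
```

The median is used rather than the mean because a single mistyped entry would drag the mean upwards and make itself look less anomalous.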

Let’s dig a little deeper into the complexity

One of the main challenges is the disparate data structures across the various point-of-sale (POS) systems used by hospitality businesses, especially restaurant chains with multiple locations. Each POS system has its own unique data model and schema, often resulting in highly denormalised, nested data structures that require significant transformation and mapping efforts.

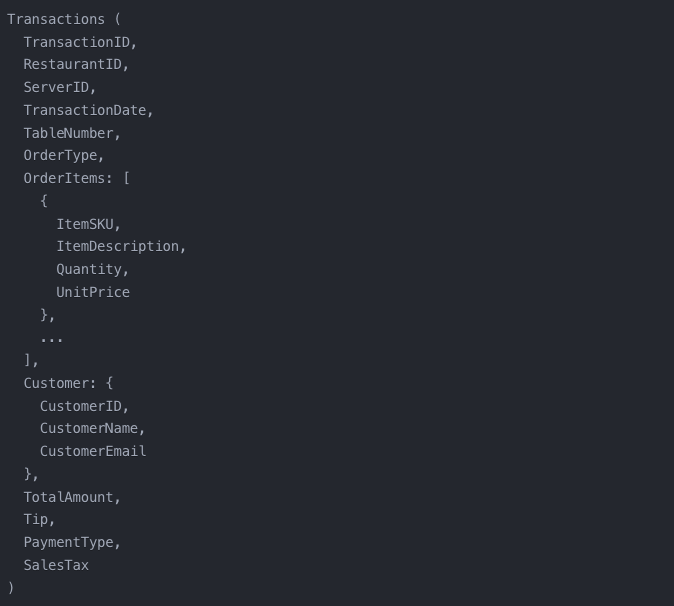

For example, consider a scenario where one POS system stores transaction data in a single, denormalised table, with all the order details, menu items, and customer information nested within the same record:

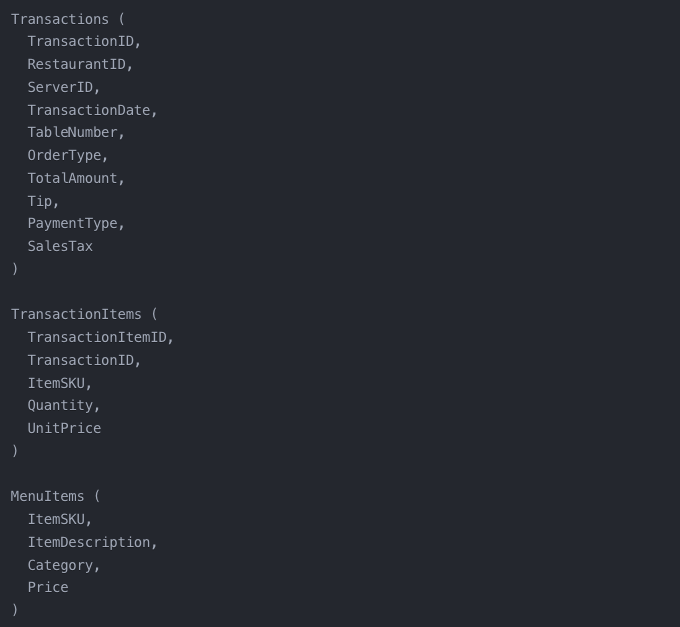

However, another POS system may have a more normalised data structure, with separate tables for transactions, order items, and menu items, but with potentially inconsistent relationships and data quality issues.

Reconciling these disparate data structures and normalising the data into a unified, consistent format is a complex and technically challenging task. It often requires the implementation of advanced data mapping, transformation, and schema integration techniques to ensure data integrity and seamless reporting across the organisation.
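As a simplified illustration of that mapping work, the sketch below turns both shapes of data described above (a nested, denormalised record and a set of linked tables) into the same flat, line-item rows. Both source layouts and all field names are hypothetical; real POS payloads are considerably larger and messier.

```python
from decimal import Decimal


def from_nested(record):
    """POS A (hypothetical): one denormalised record per transaction, with
    order lines and menu item details nested inside it."""
    return [
        {
            "source": "pos_a",
            "transaction_id": str(record["id"]),
            "item_name": line["menu_item"]["name"],
            "quantity": int(line["qty"]),
            "unit_price": Decimal(str(line["menu_item"]["price"])),
        }
        for line in record["order"]["lines"]
    ]


def from_tables(transactions, order_items, menu_items):
    """POS B (hypothetical): separate transaction, order-item and menu-item
    tables linked by keys. Order items with a broken relationship (unknown
    transaction or menu item) are set aside as orphans rather than guessed at."""
    known_txns = {t["txn_id"] for t in transactions}
    menu = {m["menu_id"]: m for m in menu_items}
    rows, orphans = [], []
    for oi in order_items:
        item = menu.get(oi["menu_id"])
        if oi["txn_id"] not in known_txns or item is None:
            orphans.append(oi)
            continue
        rows.append(
            {
                "source": "pos_b",
                "transaction_id": str(oi["txn_id"]),
                "item_name": item["label"],
                "quantity": int(oi["count"]),
                "unit_price": Decimal(str(item["price_gbp"])),
            }
        )
    return rows, orphans
```

Returning the orphaned rows, instead of dropping them, gives a data quality report for free.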

Another layer of complexity comes from the need to handle inconsistent data formats and representations across the different POS systems. Differences in date/time formats, currency, tax calculations, and other data types make the data integration process even more complicated and introduce potential data quality issues.

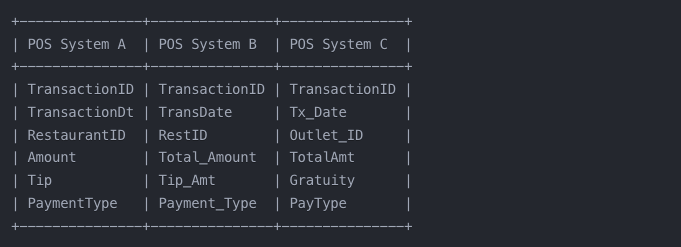

Take a look at this diagram, which shows the problems when integrating data from multiple POS systems with different data formats and representations:

In this scenario, the data integration process would need to account for the various naming conventions, data types, and representations to produce a consistent and reliable data model.
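A minimal sketch of format normalisation, assuming two hypothetical POS exports: one with day-first local timestamps and formatted currency strings, the other with ISO 8601 timestamps and totals already in pence. Both end up as UTC timestamps and integer amounts in minor units, which avoids floating-point rounding in later aggregation.

```python
from datetime import datetime, timedelta, timezone
from decimal import Decimal


def to_minor_units(text):
    """'£1,234.50' -> 123450. Assumes a currency with two decimal places."""
    cleaned = text.strip().lstrip("£$€").replace(",", "")
    return int((Decimal(cleaned) * 100).to_integral_value())


def normalise_pos_a(row, site_tz):
    """POS A (hypothetical): day-first local timestamps and formatted currency strings.

    site_tz is the site's tzinfo; in practice this would be something like
    zoneinfo.ZoneInfo("Europe/London") so that daylight saving is handled.
    """
    local = datetime.strptime(row["date"], "%d/%m/%Y %H:%M").replace(tzinfo=site_tz)
    return {
        "closed_at_utc": local.astimezone(timezone.utc),
        "total_minor": to_minor_units(row["total"]),
        "currency": "GBP",  # assumption: POS A only reports sterling
    }


def normalise_pos_b(row):
    """POS B (hypothetical): ISO 8601 timestamps with offsets, totals already in pence."""
    closed = datetime.fromisoformat(row["closedAt"])
    return {
        "closed_at_utc": closed.astimezone(timezone.utc),
        "total_minor": int(row["totalPence"]),
        "currency": row["currencyCode"],
    }


summer_offset = timezone(timedelta(hours=1))  # fixed offset, for the example only
a = normalise_pos_a({"date": "31/07/2024 19:45", "total": "£1,234.50"}, summer_offset)
b = normalise_pos_b(
    {"closedAt": "2024-07-31T18:45:00+00:00", "totalPence": 123450, "currencyCode": "GBP"}
)
```

After normalisation, the same sale recorded by either system produces an identical record, which is what makes cross-system reporting possible.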

The sheer volume of transactions generated by hospitality businesses, especially large restaurant chains, can create significant technical challenges. Handling millions of transactions per year requires scalable data integration pipelines, which often rely on distributed computing frameworks, incremental data processing and data partitioning techniques. On top of this, the pipeline needs to deliver data in real time, as this is the only way for data to genuinely influence performance: without real-time data, meaningful changes can’t be made.
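Incremental processing usually means only fetching what has changed since the last run. One common pattern, sketched here under the assumption that each record carries an `updated_at` timestamp (a hypothetical field name), is a per-site high-water mark combined with partitioning by site and business date, so that batches can be processed in parallel.

```python
from collections import defaultdict
from datetime import date, datetime


def incremental_batch(records, watermarks):
    """Pick out records newer than each site's high-water mark and group them
    into (site_id, business_date) partitions.

    records: iterable of dicts with hypothetical keys "site_id",
    "updated_at" (datetime) and "business_date" (date).
    watermarks: dict of site_id -> latest updated_at already processed.

    Returns (partitions, new_watermarks). The caller should persist
    new_watermarks only after the partitions have loaded successfully,
    so a failed run is simply retried from the old watermark.
    """
    partitions = defaultdict(list)
    new_watermarks = dict(watermarks)
    for rec in records:
        site = rec["site_id"]
        last = watermarks.get(site)
        if last is not None and rec["updated_at"] <= last:
            continue  # already processed in an earlier run
        partitions[(site, rec["business_date"])].append(rec)
        current = new_watermarks.get(site)
        if current is None or rec["updated_at"] > current:
            new_watermarks[site] = rec["updated_at"]
    return dict(partitions), new_watermarks


# Site "s1" was last processed up to 12:00; site "s2" has never been processed.
day = date(2024, 3, 1)
records = [
    {"site_id": "s1", "updated_at": datetime(2024, 3, 1, 11, 0), "business_date": day},
    {"site_id": "s1", "updated_at": datetime(2024, 3, 1, 13, 0), "business_date": day},
    {"site_id": "s2", "updated_at": datetime(2024, 3, 1, 9, 0), "business_date": day},
]
partitions, watermarks = incremental_batch(records, {"s1": datetime(2024, 3, 1, 12, 0)})
```

One caveat of this simple version: a late record carrying exactly the watermark timestamp would be skipped, so pipelines often re-read a small overlap window and rely on deduplication downstream.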

Lastly, the need to integrate the normalised POS data with other critical business systems, such as inventory management and labour scheduling platforms (if they are not pre-integrated or don’t have the level of granularity you need), adds more complexity to the process. Developing the necessary data exchange mechanisms, APIs, and transformation logic to facilitate seamless data flow between these disparate systems can be a daunting technical undertaking.

In summary, the technical complexities of data integration and normalisation in the hospitality industry stem from the lack of standardisation across the data sources, the need to handle inconsistent data formats and representations, the massive transaction volumes, and the requirement to integrate with a wide range of business systems. Overcoming these challenges requires a comprehensive, strategic approach, as well as the adoption of advanced data integration techniques and technologies.

The Tenzo way

Normalisation is our bread and butter. We now have 80+ integrations, and we use our unique experience and algorithms to normalise the data from each one of them in a scalable manner. Once an integration has been built, every business using that system benefits from the work.

This normalisation step happens as part of our ETL (Extract, Transform and Load) pipeline. We use Airflow 2.0 to orchestrate our ETL infrastructure, which handles thousands of tasks per hour.

Data normalisation is a core element of our ‘Transform’ and ‘Load’ steps, where we map data from diverse terminologies to a unified schema, so that the data loaded into our central data warehouse is ready for streamlined analysis.
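In its simplest form, mapping diverse terminologies to a unified schema is a per-source lookup table. The sketch below is illustrative only: the source names, field names and channel labels are hypothetical, and it does not describe Tenzo’s actual schema. Unrecognised values map to `unknown` so they surface in reporting instead of crashing the load.

```python
# Hypothetical mappings from each source's native field names to the unified schema.
FIELD_MAP = {
    "pos_a": {"chk_no": "ticket_id", "covers": "guest_count", "net_amt": "net_sales"},
    "pos_b": {"orderRef": "ticket_id", "guests": "guest_count", "netTotal": "net_sales"},
}

# Which native field holds the order channel, and how its labels map to one vocabulary.
CHANNEL_FIELD = {"pos_a": "mode", "pos_b": "channel"}
CHANNEL_MAP = {
    "pos_a": {"EI": "dine_in", "TA": "takeaway", "DEL": "delivery"},
    "pos_b": {"eat-in": "dine_in", "collection": "takeaway", "delivery": "delivery"},
}


def to_unified(source, row):
    """Rename a source row's fields and translate its channel label."""
    unified = {target: row[native] for native, target in FIELD_MAP[source].items()}
    raw_channel = row.get(CHANNEL_FIELD[source])
    unified["channel"] = CHANNEL_MAP[source].get(raw_channel, "unknown")
    unified["source"] = source
    return unified
```

Keeping the mappings as data rather than code means supporting a new system is mostly a matter of adding entries, which is one way integration work can be reused across customers.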

We can do this at scale

One of the common challenges we solve for our customers is migrating from one system to another.

As an example, imagine a chain of 50 restaurants all using the same POS system. The POS provider tells them that their version is being retired, so they need to move to a new system within a short time frame (2-3 sites per day) while maintaining sales reporting across both systems, both to keep historical data and to continue receiving real-time feedback.

The key challenges of switching from one system to another include:

- Inflexible data structure and schema in the current POS system, making it difficult to extract and transform the data

- Inconsistent data formatting and representation across the different restaurant locations (e.g., date/time, currency, taxes)

- Lack of standardised business rules and reporting requirements, leading to inconsistent data quality and integrity

- Difficulty in integrating the current POS data with new data sources or external systems (e.g., customer loyalty programs, inventory management)

- Potential loss of past data and transaction history during the migration to the new POS system, which could impact historical reporting, trend analysis etc.

Consolidating and normalising the data from the original POS system is crucial to successfully implement the new system. This ensures data integrity, enables accurate reporting, and provides a unified view of the business operations across all locations, while also minimising the risk of losing critical historical data.
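During a rolling migration, each site switches systems on a different day, so reporting has to take each site’s figures from the old POS before its cutover date and from the new POS from that date onwards. A minimal sketch, assuming both systems’ data have already been normalised into daily rows with the hypothetical fields shown:

```python
from datetime import date


def stitch_sales(old_rows, new_rows, cutover):
    """Combine two systems' normalised daily sales during a rolling migration.

    cutover: dict of site_id -> first business date on the new POS
    (hypothetical; sites missing from the dict have not migrated yet).
    Rows are dicts with "site_id", "business_date" and "net_sales".
    Old-system rows on or after cutover (parallel running) and new-system
    rows before cutover (e.g. test transactions) are both ignored.
    """
    combined = {}
    for row in old_rows:
        switch = cutover.get(row["site_id"])
        if switch is None or row["business_date"] < switch:
            combined[(row["site_id"], row["business_date"])] = row
    for row in new_rows:
        switch = cutover.get(row["site_id"])
        if switch is not None and row["business_date"] >= switch:
            combined[(row["site_id"], row["business_date"])] = row
    return [combined[key] for key in sorted(combined)]


old = [
    {"site_id": 1, "business_date": date(2024, 3, 1), "net_sales": 100, "system": "old"},
    {"site_id": 1, "business_date": date(2024, 3, 2), "net_sales": 90, "system": "old"},
    {"site_id": 2, "business_date": date(2024, 3, 1), "net_sales": 80, "system": "old"},
]
new = [
    {"site_id": 1, "business_date": date(2024, 3, 2), "net_sales": 95, "system": "new"},
    {"site_id": 1, "business_date": date(2024, 3, 3), "net_sales": 110, "system": "new"},
    {"site_id": 2, "business_date": date(2024, 3, 1), "net_sales": 1, "system": "new"},
]
stitched = stitch_sales(old, new, {1: date(2024, 3, 2)})
```

Here site 1 moves to the new POS on 2 March, while site 2 has not migrated, so its stray new-system test row is ignored.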

Without a third-party solution, solving this challenge means you need to:

- Extract the data from the source (POS)

- Transform the data and map it according to the new data model

- Load the data into the new data model
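Those three steps can be sketched end to end in a few lines. Here an in-memory SQLite database stands in for the target data store and a list of pages stands in for a POS API; the field names are hypothetical. Loading with `INSERT OR REPLACE` against a primary key makes the load idempotent, so re-running a batch after a failure does not create duplicate sales.

```python
import sqlite3


def extract(api_pages):
    """Extract: yield raw records page by page (api_pages stands in for a paginated POS API)."""
    for page in api_pages:
        yield from page


def transform(raw):
    """Transform: map hypothetical native fields onto the target model's columns."""
    return (raw["siteCode"], str(raw["ticketNo"]), raw["closedAt"], int(raw["netPence"]))


def load(conn, rows):
    """Load: upsert on the natural key so re-running a batch is idempotent."""
    conn.executemany(
        "INSERT OR REPLACE INTO sales (site_id, ticket_id, closed_at, net_minor) "
        "VALUES (?, ?, ?, ?)",
        rows,
    )
    conn.commit()


def run_pipeline(conn, api_pages):
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales ("
        "site_id TEXT, ticket_id TEXT, closed_at TEXT, net_minor INTEGER, "
        "PRIMARY KEY (site_id, ticket_id))"
    )
    load(conn, (transform(raw) for raw in extract(api_pages)))


conn = sqlite3.connect(":memory:")
pages = [
    [
        {"siteCode": "LDN01", "ticketNo": 1, "closedAt": "2024-03-01T12:00:00Z", "netPence": 1500},
        {"siteCode": "LDN01", "ticketNo": 2, "closedAt": "2024-03-01T12:20:00Z", "netPence": 900},
    ],
    [{"siteCode": "MAN01", "ticketNo": 1, "closedAt": "2024-03-01T12:05:00Z", "netPence": 1200}],
]
run_pipeline(conn, pages)
run_pipeline(conn, pages)  # a retried run: still three rows, not six
```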

Technical Challenges

The technical challenge of normalising and integrating the POS data for a chain of 50 restaurants can be significant, given the volume of transactions recorded every day.

Assuming each restaurant generates an average of 2,000 transactions per day, the chain would have:

- 50 restaurants x 2,000 transactions per day = 100,000 transactions per day

- 100,000 transactions per day x 365 days per year = 36.5 million transactions per year

- 36.5 million transactions per year x 3 years = 109.5 million transactions to be normalised

This large volume of data, alongside data quality issues, inconsistencies, and business-specific rules can make this process particularly tricky.

The technical challenges include:

- Developing scalable ETL (Extract, Transform, Load) pipelines to process the high volume of data

- Implementing robust deduplication and record linkage algorithms to consolidate the data across multiple locations

- Designing the target data model and schema to optimise query performance and reporting capabilities

- Integrating the normalised POS data with other business systems, like inventory management and customer loyalty programs (passthrough integrations).

- Ensuring the data integration process is resilient, with mechanisms for error handling, retries, and data validation
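Two of these, deduplication and validation, can be sketched simply. The version below assumes a POS may resend a ticket when it is edited or an export is retried, and keeps only the most recent copy per source, site and ticket; invalid records are set aside with a reason rather than failing the whole batch. Field names are hypothetical, and cross-location record linkage (matching the same entity described differently in different systems) is not covered.

```python
REQUIRED = ("source", "site_id", "ticket_id", "updated_at", "net_minor")


def validate(records):
    """Split records into (valid, rejected) instead of failing the whole batch."""
    valid, rejected = [], []
    for rec in records:
        missing = [field for field in REQUIRED if rec.get(field) is None]
        if missing:
            rejected.append((rec, "missing fields: " + ", ".join(missing)))
        elif not isinstance(rec["net_minor"], int):
            rejected.append((rec, "net_minor must be an integer amount in minor units"))
        else:
            valid.append(rec)
    return valid, rejected


def deduplicate(records):
    """Keep one record per (source, site_id, ticket_id): the most recently updated.

    updated_at values are assumed to be comparable, e.g. datetimes or
    ISO 8601 UTC strings in a single consistent format.
    """
    latest = {}
    for rec in records:
        key = (rec["source"], rec["site_id"], rec["ticket_id"])
        if key not in latest or rec["updated_at"] > latest[key]["updated_at"]:
            latest[key] = rec
    return list(latest.values())


records = [
    {"source": "pos_a", "site_id": "s1", "ticket_id": "1", "updated_at": "2024-03-01T12:00:00Z", "net_minor": 1500},
    {"source": "pos_a", "site_id": "s1", "ticket_id": "1", "updated_at": "2024-03-01T12:05:00Z", "net_minor": 1350},
    {"source": "pos_a", "site_id": "s1", "ticket_id": "2", "updated_at": "2024-03-01T12:10:00Z", "net_minor": None},
]
valid, rejected = validate(records)
clean = deduplicate(valid)
```

Validating before deduplicating ensures the key fields exist when they are used, and the rejected list doubles as an audit trail.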

With Tenzo, the transition is smooth thanks to our data normalisation process. Users don’t have to consider any of these issues: we seamlessly aggregate the disparate data sources.

Beyond POS Data

Normalisation complexities extend to other restaurant data domains such as:

- Inventory data from stock management systems

- Customer feedback from various platforms

- Labour data from rota tools

The Buy vs. Build Decision

When it comes to data integration and normalisation, businesses often think they need to build their own business intelligence solution to be able to connect disparate data sources. However, there is another way: Tenzo.

If you’re wondering how Tenzo compares to data warehousing and visualisation solutions, check out our comparison blog.

By choosing Tenzo, you can:

- Accelerate Time-to-Value: Tenzo’s 80+ integrations and out-of-the-box features enable a much faster integration process compared with building a custom solution from scratch, which can often take years.

- Reduce Development and Maintenance Costs: Developing and maintaining a robust data integration platform in-house can be resource-intensive and costly, especially when APIs change and edge cases need to be handled. Tenzo proactively takes care of these issues without user input.

- Access to Expertise: Tenzo’s team of data integration experts ensures that your data is handled with the highest standards of quality and security.

- Scalability and Flexibility: As your business grows and your data needs evolve, Tenzo’s scalable architecture and regular feature updates allow you to adapt and stay ahead of the curve.

Conclusion

In the fast-paced and competitive hospitality industry, the ability to integrate and normalise data from multiple sources is no longer a nice-to-have, but a strategic must. By leveraging a market-leading platform like Tenzo, your business can overcome the technical complexities of data integration, unlock the full potential of your data, and gain a significant competitive advantage in the process.